The Linux cut command is a utility that extracts specific parts of each line of text (bytes, characters, or delimited fields), which makes it a simple but useful building block for automation. Whether you’re dealing with logs, CSV files, or system output, cut lets you quickly pull out the data you need.

Prerequisites

Before diving into the world of the cut command, it’s important to have a basic understanding of the following concepts:

- Familiarity with the terminal or command-line interface (CLI)

- Understanding of basic file manipulation commands (such as ls and cat)

Using the Cut Command

The cut command is straightforward to use, and the syntax is as follows:

cut [OPTIONS] [FILE]

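where [OPTIONS] are the flags or parameters that you want to use, and [FILE] is the name of the file you want to read. You must give one of the selection options (-b for bytes, -c for characters, or -f for fields), and if you omit [FILE], cut reads from standard input, which is what makes it usable in pipelines.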

Let’s dive into some practical use-cases to see how the cut command can be applied in real-world scenarios.

Use-Case 1: Extracting Columns from a CSV File

Suppose you have a large CSV file, and you only want to extract the first and third columns. The cut command can easily accomplish this task by using the -f (field) option, which allows you to specify the columns you want to extract. Here’s an example:

cut -f1,3 -d, file.csv

In this example, -d, specifies that the delimiter used in the file is a comma, and -f1,3 specifies that you only want to extract the first and third columns.
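To make the behaviour concrete, here is a minimal sketch that builds a small throwaway CSV and runs the same command against it. The file contents and the variable names (csv_file, columns) are made up for illustration and are not part of the original example.

```shell
# Create a small sample CSV (hypothetical data, for illustration only).
csv_file=$(mktemp)
printf '%s\n' \
  'name,age,city' \
  'alice,30,paris' \
  'bob,25,berlin' > "$csv_file"

# Extract the first and third comma-separated fields.
columns=$(cut -d, -f1,3 "$csv_file")
printf '%s\n' "$columns"
# Prints:
# name,city
# alice,paris
# bob,berlin

# Clean up the temporary file.
rm -f "$csv_file"
```

Keep in mind that cut splits on every comma and knows nothing about CSV quoting, so a quoted field that itself contains a comma would be split in the wrong place.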

Use-Case 2: Extracting Data from System Output

Suppose you want to extract the IP addresses from the output of the ifconfig command. The cut command can easily accomplish this task by using the -f2 option and piping the output of the ifconfig command to the cut command:

ifconfig | cut -f2 -d:

In this example, -d: specifies that the delimiter is a colon, and -f2 selects the second field. Note that cut prints lines containing no delimiter unchanged unless you add the -s option, and the format of ifconfig output differs between systems and versions, so this command may not give you clean IP addresses. A more dependable approach is to start from the ip command and combine grep and awk with cut:

ip addr show | grep inet | awk '{print $2}' | cut -d/ -f1
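If you want to experiment with the colon-based approach without depending on your own network configuration, the sketch below runs it against hard-coded sample text. The text imitates the older ifconfig layout (with an "inet addr:" label) and is an assumption for illustration; your system's output may look different. The variable names are likewise hypothetical.

```shell
# Sample text in the older ifconfig layout (assumed, for illustration only).
sample_output='eth0      Link encap:Ethernet  HWaddr 00:11:22:33:44:55
          inet addr:192.168.1.10  Bcast:192.168.1.255  Mask:255.255.255.0
          UP BROADCAST RUNNING MULTICAST'

# 1. grep keeps only the line that carries the IPv4 address.
# 2. The first cut takes the text between the first and second colon.
# 3. The second cut keeps everything before the first space.
ip_address=$(printf '%s\n' "$sample_output" \
  | grep 'inet addr:' \
  | cut -d: -f2 \
  | cut -d' ' -f1)

printf '%s\n' "$ip_address"
# Prints: 192.168.1.10
```

Without the grep step, the first line would contribute an unrelated second field and the last line, which has no colon at all, would be printed unchanged.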

Tips and Tricks

Here are five tips and tricks to help you get the most out of the cut command:

- Use the -d option to specify the delimiter used in your file (the default is a tab).

- Use the -f option to specify the fields or columns you want to extract.

- Use the -b option to select bytes by position, for example a range from a starting to an ending position.

- Use the --complement option (a GNU extension) to extract everything except the fields specified.

- Use the -s option to suppress lines that do not contain the delimiter, so that only delimited lines are processed.
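The following sketch tries three of these options on a single made-up line. The sample text and variable names are illustrative, and --complement assumes the GNU coreutils version of cut, so it may not be available on other systems.

```shell
# A made-up colon-delimited line to experiment with.
line='alpha:beta:gamma:delta'

# -b selects bytes by position (here bytes 1 through 5).
first_bytes=$(printf '%s\n' "$line" | cut -b1-5)
printf '%s\n' "$first_bytes"        # Prints: alpha

# --complement (GNU extension) prints every field except the listed ones.
without_second=$(printf '%s\n' "$line" | cut -d: -f2 --complement)
printf '%s\n' "$without_second"     # Prints: alpha:gamma:delta

# -s drops lines that do not contain the delimiter at all.
only_delimited=$(printf '%s\n' "$line" 'no delimiter here' | cut -s -d: -f1)
printf '%s\n' "$only_delimited"     # Prints: alpha
```

Without -s, the last command would also print "no delimiter here" unchanged, because cut passes lines without the delimiter straight through by default.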

Challenge

Here’s a challenge for you: try using the cut command to extract the first five columns from a tab-delimited file and only include rows that have a specific word in the first column. The final output should only include the first five columns and rows that meet the specified criteria.

Hint: you’ll need the -f option to extract the first five columns and the grep command to filter the rows that have the specified word in the first column. Because a tab is cut’s default delimiter, the -d option is optional here. Good luck!

In conclusion, the Linux cut command is a small but versatile tool for extracting data from text files and command output. With its handful of options and practical use-cases, it’s well worth knowing for anyone who wants to take their command-line skills to the next level.

What Next?

If you’re interested in learning more about the Linux command-line and system administration, here are a few related topics that you might find interesting:

- The

grepcommand: The grep command is a powerful tool for searching for patterns in text files. It can be used in conjunction with the cut command to filter data. - Regular expressions: Regular expressions are a language for describing patterns in text. They are a fundamental tool for advanced text processing, and they are used by many command-line utilities, including grep and sed.

- The

sedcommand: The sed command is a stream editor that allows you to perform text transformations on a file or standard input. It can be used to perform complex text manipulations, such as replacing strings, deleting lines, and more. - The

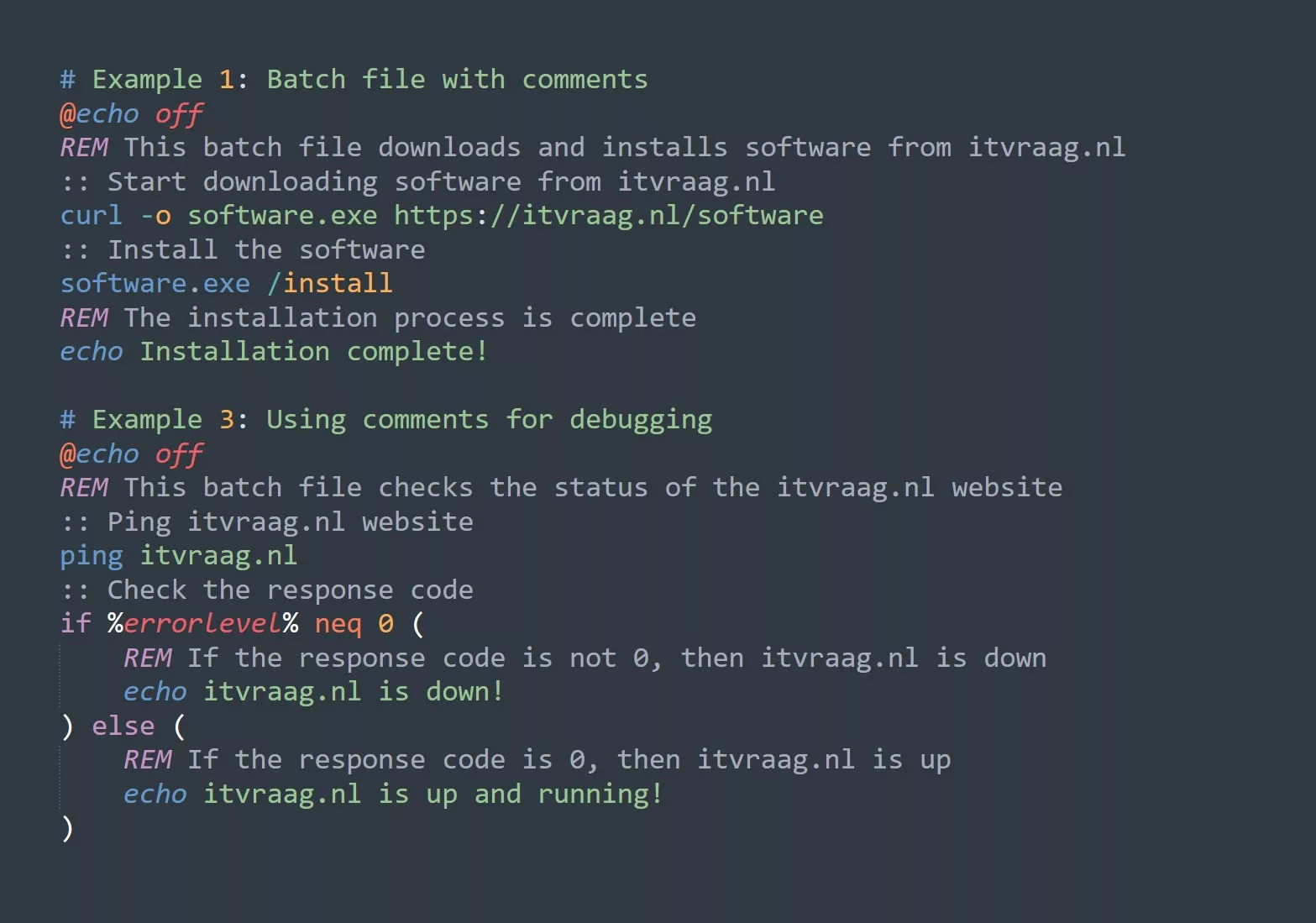

awkcommand: The awk command is a programming language for processing text files. It allows you to perform complex operations, such as calculating statistics, sorting data, and more. - Shell scripting: Shell scripting is the process of creating scripts that automate tasks on the command-line. It allows you to automate repetitive tasks and write scripts that can be executed on a variety of systems.